Project Overview

The purpose of this project is to draw insights from customer reviews that give Amazon sellers and marketers ways to improve their products and marketing.

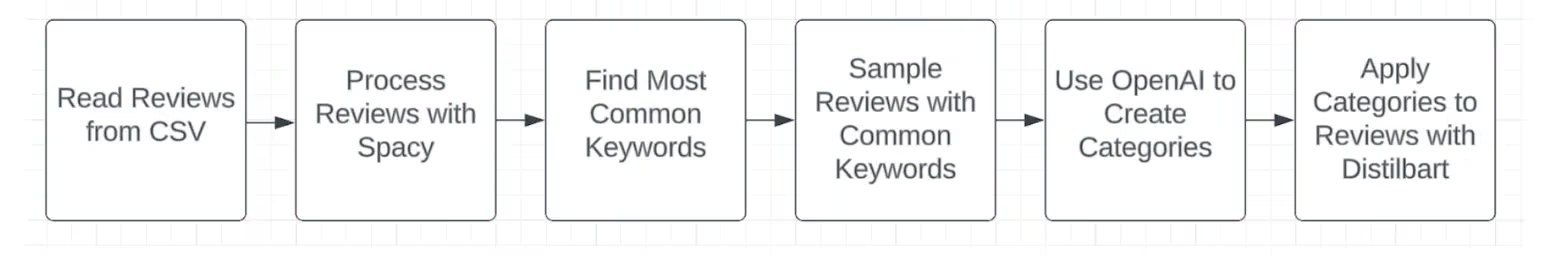

This project uses a specific type of machine learning called Natural Language Processing (NLP) to assign categories to reviews so that sellers can understand how customers feel about their products without having to read every review.

Three major NLP tools are used in this project: spaCy, OpenAI, and DistilBART.

Using NLP to Process Reviews

Using machine learning tools requires balancing output quality, speed, and cost. OpenAI's models are some of the best, but they can be expensive at scale, so I use free NLP tools for the simpler tasks. spaCy preprocesses the reviews before they are sent to OpenAI's models, which generate the categories, and DistilBART then applies those categories to the reviews. Both spaCy and DistilBART are free.

Data Sources

I sourced these Amazon reviews from Rapid API, which hosts APIs for Google Maps data, Yelp data, news data, social media data, and many other kinds of data that you might otherwise have to scrape.

The GDELT Project is an initiative to construct a catalog of human societal-scale behavior and beliefs across all countries of the world. It's basically a giant database of political events that is updated every 15 minutes with links to news articles. I've been thinking about applying these machine learning ideas to news data, and this might be an interesting project for anyone who wants to dive into machine learning.

https://rapidapi.com/

https://www.gdeltproject.org/

Natural Language Processing - https://www.ibm.com/topics/natural-language-processing

NLP is the field of artificial intelligence concerned with giving computers the ability to understand text and spoken words in much the same way human beings can. Common NLP tasks include:

Speech recognition

Part of speech tagging - nouns, verbs, etc

Named entity recognition - locations, people's names

Sentiment analysis - positive, negative, angry, sad, etc

Text Summarization

spaCy - https://spacy.io/

spaCy is a free, open-source library for advanced Natural Language Processing (NLP) in Python.

spaCy is designed specifically for production use and helps you build applications that process and "understand" large volumes of text. It can be used to build information extraction or natural language understanding systems, or to pre-process text for deep learning.

I use spaCy to remove stop words and lemmatize words. Stop words are words like "and", "or", and "the" that carry little meaning by themselves. Lemmatizing means reducing related word forms to a base word, for example, reducing "builds", "building", or "built" to the lemma "build".

Lemmatization

Reducing words to their base form. For example, "changing", "changes", "changed" all become "change".

Stop Words

Common words like "the", "a", "in" that are filtered out during text processing as they add little semantic value.

Tokenization

Breaking text into smaller units (tokens) like words, phrases, or symbols for analysis.
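To make the three terms above concrete, here is a minimal sketch that uses only the Python standard library. It is not spaCy and not the project's actual preprocessing code: the stop list, the lemma table, and the function names `tokenize` and `preprocess` are small hand-written stand-ins chosen for illustration. spaCy does the same job with a trained pipeline and much larger language resources.

```python
import re

# Tiny hand-written stop list and lemma table, for illustration only.
# A real library such as spaCy ships far larger, language-specific resources.
STOP_WORDS = {"the", "a", "an", "and", "or", "in", "it", "is", "was", "this"}
LEMMAS = {
    "builds": "build", "building": "build", "built": "build",
    "changing": "change", "changes": "change", "changed": "change",
}

def tokenize(text):
    """Tokenization: split text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def preprocess(text):
    """Tokenize, drop stop words, then map each remaining word to its lemma."""
    tokens = tokenize(text)
    return [LEMMAS.get(tok, tok) for tok in tokens if tok not in STOP_WORDS]

print(preprocess("The seller changed the packaging and it builds trust"))
# prints ['seller', 'change', 'packaging', 'build', 'trust']
```

The shorter, normalized output is what makes preprocessing worthwhile here: fewer tokens go to the paid model, and different forms of the same word are counted together.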

LangChain & Hugging Face

LangChain and Hugging Face do most of the hard work by providing abstractions that distill complex machine learning code into a few lines of easy-to-read Python.

I use OpenAI's Davinci 3 model later in this project, which costs around 2 cents per 1,000 tokens, counting both input and output. Per OpenAI, the average token is around 4 characters long.

So if you send a 400-character instruction to OpenAI and it gives you a 200-character response, you will be charged for about 150 tokens ((400+200)/4), or around 0.3 cents. While that number seems small, it adds up if you run this for millions of reviews.
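The arithmetic above can be wrapped in a small helper to see how the cost scales. This is a rough sketch that assumes the figures quoted in the text (2 cents per 1,000 tokens, about 4 characters per token). The function names are hypothetical, and real token counts depend on the model's tokenizer rather than on a fixed character ratio.

```python
CENTS_PER_1K_TOKENS = 2.0  # price quoted above for the Davinci 3 model
CHARS_PER_TOKEN = 4        # rough average quoted above; real tokenization varies

def estimate_tokens(prompt_chars, response_chars):
    """Approximate billed tokens from the prompt and response lengths in characters."""
    return (prompt_chars + response_chars) / CHARS_PER_TOKEN

def estimate_cost_cents(prompt_chars, response_chars):
    """Approximate cost of one request in cents."""
    tokens = estimate_tokens(prompt_chars, response_chars)
    return tokens * CENTS_PER_1K_TOKENS / 1000

per_review = estimate_cost_cents(400, 200)
reviews = 1_000_000
print(f"{per_review:.2f} cents per review")
print(f"${per_review * reviews / 100:,.0f} for {reviews:,} reviews")
```

At 0.3 cents per review, one million reviews come to about $3,000, which is why the cheaper tools handle the simpler steps.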

Model Evaluation

You'll want to split the data you use to build your models into three parts: a training set, a validation set, and a test set.

Training Set

A training set is a subset of data used to train a model.

Validation Set

A validation set is used to evaluate the results of the model so that you can tweak it further.

Test Set

A test set is used to test the final results of the model.

The difference between the validation set and the test set is that you can tweak your model after viewing the results on the validation set, but not after viewing the results on the test set.

The test set provides an unbiased estimate of the model's performance on new, unseen data. It assesses the model's ability to generalize beyond what it has already been exposed to.
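A three-way split can be sketched with the standard library alone. The 80/10/10 proportions, the fixed seed, and the function name `split_dataset` are assumptions made for illustration. The source does not say which proportions or tooling the project uses.

```python
import random

def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle a copy of items and cut it into train, validation, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains is held out for the end
    return train, val, test

reviews = [f"review {i}" for i in range(100)]
train, val, test = split_dataset(reviews)
print(len(train), len(val), len(test))
```

Shuffling before cutting matters when the data arrives in some order, such as by date or by product. Without it, the three sets could end up covering different kinds of reviews.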

Evaluating the Model

You want to evaluate both how often the model is right and how confidently it is right or wrong. 95% success sounds good until we're talking about the probability of an airplane landing. Loss functions are useful here because they penalize your model for bad results.
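One way to see the difference between "how often" and "how intensely" is to compare accuracy with a loss function on the same predictions. The sketch below uses binary log loss (cross-entropy) as one common choice. The source does not name a specific loss function, and the labels and probabilities are made-up illustration values.

```python
import math

def accuracy(labels, probs, threshold=0.5):
    """Fraction of predictions that land on the correct side of the threshold."""
    correct = sum((p >= threshold) == bool(y) for y, p in zip(labels, probs))
    return correct / len(labels)

def log_loss(labels, probs, eps=1e-15):
    """Mean binary cross-entropy; confident wrong answers are penalized heavily."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clamp so log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)

labels = [1, 1, 0, 0]
hesitant = [0.9, 0.4, 0.1, 0.2]    # one mistake, made with low confidence
confident = [0.9, 0.01, 0.1, 0.2]  # the same mistake, made with high confidence

for name, probs in [("hesitant", hesitant), ("confident", confident)]:
    print(name, accuracy(labels, probs), round(log_loss(labels, probs), 3))
```

Both sets of predictions score 75% accuracy, but the log loss is several times higher for the confident mistake. That difference is the kind of signal accuracy alone hides.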